How To Scrape Data from Any Website: 5 Code and No-Code Methods


Websites hold a wealth of valuable data. However, harnessing that data precisely and efficiently is not always simple. This is where web scraping tools come into play. If you would rather not pay for such tools, we have rounded up the 5 best free web scraping methods.

So, without any further ado, let’s dive in!


What is Web Scraping?

Web scraping is the automated extraction of large amounts of data from websites using software. Because the process is automated, it is an efficient way to collect large volumes of data, whether the source is structured or unstructured.

Individuals and businesses use this data extraction method for various purposes including:

- Market research

- Lead generation

- Price monitoring

A specialized tool used for web scraping is referred to as a ‘Web Scraper’. It is designed to extract data quickly and accurately. The level of complexity and design of a web scraper might vary depending on the project.

Is Web Scraping Legal?

A common question is “Is web scraping legal?” The short answer is “Yes”, as long as you are extracting data from a publicly available website. That said, scraping should never be done in a way that raises concerns about how the data is extracted or used.

Several laws also provide guidelines that apply to web scraping. These include:

- Computer Fraud and Abuse Act (CFAA)

- Digital Millennium Copyright Act

- Contract Act

- Data Protection Act

- Anti-hacking Laws

Why Is Web Scraping Useful?

Scraping a website is getting more complicated with each passing day. However, with the availability of web scraping tools, it is a lot easier to extract large-scale data. So, whether you run a well-established business or still struggle to grow your business, web scraping can be more than helpful.

To help you understand why web scraping is so useful, we have briefly discussed some of its most prominent benefits.

1. Allows Generating Quality Leads

Lead generation tends to be a tiresome task. However, with web scraping, generating quality leads won’t take too long. With an efficient web scraping tool, you can scrape the most relevant data of your targeted audience.

For instance, you can scrape data by using various filters such as company, job title, education, and demography. Once you get the contact information of the target audience, it’s time to start your marketing campaign.

2. Offers More Value to Your Customers

Customers are always willing to pay more if a product offers more value. With web scraping, it is possible to improve the quality of your product or services. For this purpose, you need to scrape information about the customers and their feedback regarding the performance of your product.

3. Makes it Easy to Monitor Your Competitor

It is essential to monitor the latest changes made to your competitor’s website. This is the area where web scraping can be helpful. For example, you can monitor what types of new products your competitor has launched.

You can also get valuable insights regarding your competitor’s audience or potential customers. This allows you to carve a new market strategy.

4. Helps with Making Investment Decisions

Investment decisions are usually complex. So, you need to collect and analyze the relevant information before reaching a decision. For this purpose, you can take advantage of web scraping to extract data and conduct analysis.

What Data Can We “Scrape” from the Web?

Technically, you can scrape almost any website that is available to the public. However, ethical and legal considerations mean that you shouldn’t always do so. It is therefore worth understanding some general rules before you start scraping.

Some of these rules include:

- Don’t scrape private data that needs a passcode or username to access.

- Avoid copying or scraping copyrighted web data.

- Don’t scrape data if doing so is explicitly prohibited by the site’s ToS (Terms of Service).
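Alongside the Terms of Service, many sites publish a robots.txt file stating which paths automated clients may visit. As a minimal sketch, Python’s standard urllib.robotparser module can evaluate such rules before a scraper requests a page. The rules, the bot name "my-scraper", and the example.com URLs below are made up for illustration, and passing this check does not replace reading the site’s ToS.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download the site's own file
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Allow: /
"""


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

for url in ["https://example.com/blog/post-1", "https://example.com/account/settings"]:
    verdict = "allowed" if parser.can_fetch("my-scraper", url) else "disallowed"
    print(url, "->", verdict)
```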

Free Web Scraping Methods

Web scraping is a popular way to extract valuable data. In addition to paid web scraping tools, you can also take advantage of free scraping methods.

To help you with this, here are some of the methods that you can use depending on your data extraction needs:

1. Manual Scraping with Upwork and Fiverr

If you are interested in manual data scraping, you can hire a freelancer via popular freelancing platforms like Upwork and Fiverr. These platforms help you find a web scraping expert depending on your data extraction needs.

Both Upwork and Fiverr promote their top-rated freelancers. So, you can easily find a seasoned web scraper offering online services. You can even find local web scrapers using these platforms.

2. Python Library – BeautifulSoup

BeautifulSoup is a Python library that allows you to scrape information from selected web pages. It sits on top of an XML or HTML parser and provides Pythonic idioms for searching, iterating over, and modifying the parse tree. Using this library, you can extract data from HTML and XML files.
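To make the three idioms concrete, here is a small sketch that searches, iterates over, and modifies a parse tree. It assumes the beautifulsoup4 package is already installed, and the HTML snippet is invented for illustration rather than taken from a real site.

```python
from bs4 import BeautifulSoup

# Made-up HTML used only for this illustration
html_doc = """
<ul id="posts">
  <li class="post"><a href="/a">First post</a></li>
  <li class="post"><a href="/b">Second post</a></li>
</ul>
"""

soup = BeautifulSoup(html_doc, "html.parser")

# Searching: a CSS selector returns a list of matching tags
links = soup.select("li.post a")

# Iterating: pull the text and an attribute out of each tag
titles = [link.get_text() for link in links]
hrefs = [link["href"] for link in links]

# Modifying: change an attribute directly in the parse tree
links[0]["href"] = "/first"

print(titles)
print(hrefs)
print(links[0])
```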

You need pip, Python’s package installer, to install BeautifulSoup on Linux or Windows. If pip is already available, just follow these simple steps:

Step 1: Open a terminal or command prompt.

Step 2: Run this command and wait for BeautifulSoup to install.

    pip install beautifulsoup4

Note: BeautifulSoup doesn’t parse documents itself; it relies on a parser library. Python’s built-in “html.parser” works out of the box, while “lxml” and “html5lib” are separate packages that you install with pip if you want to use them.

Step 3: Select your preferred parser library. You can choose from different options including html5lib, html.parser, or lxml.

Step 4: Verify the installation by importing the library in Python.
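One way to carry out the last two steps is to ask Python which of these packages it can find. The sketch below uses only the standard library; the helper names and the preference order (lxml, then html5lib, then html.parser) are assumptions made for illustration, not a recommendation from the BeautifulSoup project.

```python
import importlib.util

# Parser names accepted by BeautifulSoup, in the order this sketch prefers them
PREFERRED_PARSERS = ["lxml", "html5lib", "html.parser"]


def bs4_installed():
    """Return True if the bs4 package (installed as beautifulsoup4) can be imported."""
    return importlib.util.find_spec("bs4") is not None


def pick_parser():
    """Return the first parser from PREFERRED_PARSERS that is importable."""
    for name in PREFERRED_PARSERS:
        # html.parser ships with Python; the other two are separate pip installs
        if importlib.util.find_spec(name) is not None:
            return name
    return "html.parser"


print("BeautifulSoup installed:", bs4_installed())
print("Parser to pass to BeautifulSoup:", pick_parser())
```

The string returned by pick_parser() is what you would pass as the second argument to BeautifulSoup().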

How to Scrape with BeautifulSoup

Below are the key steps to follow when scraping data with BeautifulSoup.

Step 1: Fetch the page’s HTML with the requests library (install it with pip install requests if needed):

    #!/usr/bin/python3
    import requests

    url = 'https://www.scrapin.io'

    # Download the page and print its raw HTML
    data = requests.get(url)

    print(data.text)

Step 2: Extract the content from the HTML:

    #!/usr/bin/python3

    import requests
    from bs4 import BeautifulSoup
    from pprint import pprint

    url = 'https://www.scrapin.io'

    data = requests.get(url)

    my_data = []
    html = BeautifulSoup(data.text, 'html.parser')
    # Adjust these CSS selectors to match the markup of the page you scrape
    articles = html.select('a.post-card')

    for article in articles:
        title = article.select('.card-title')[0].get_text()
        excerpt = article.select('.card-text')[0].get_text()
        pub_date = article.select('.card-footer small')[0].get_text()
        my_data.append({"title": title, "excerpt": excerpt, "pub_date": pub_date})

    # Print the collected records
    pprint(my_data)

Step 3: Save the above code in a file named fetch.py, and run it using the following command:

    python3 fetch.py

3. JavaScript Library - Puppeteer

Puppeteer is a Node.js library that controls a Chrome or Chromium browser, headless by default, which makes it suitable for pages that render their content with JavaScript. Follow these steps to initialize your first Puppeteer scraper:

Step 1: Create a folder for your first Puppeteer scraper with mkdir and move into it:

    mkdir first-puppeteer-scraper-example
    cd first-puppeteer-scraper-example

Step 2: Now, initialize the Node.js project with a package.json file using the npm init command:

    npm init -y

Step 3: This command generates a package.json file. Once Puppeteer is installed (Step 4) and the "type" field is set to "module", the file should look similar to this:

    {
      "name": "first-puppeteer-scraper-example",
      "version": "1.0.0",
      "main": "index.js",
      "scripts": {
        "test": "echo \"Error: no test specified\" && exit 1"
      },
      "keywords": [],
      "author": "",
      "license": "ISC",
      "dependencies": {
        "puppeteer": "^19.6.2"
      },
      "type": "module",
      "devDependencies": {},
      "description": ""
    }

Step 4: Install the Puppeteer library with this command:

    npm install puppeteer

Step 5: Once the Puppeteer library is installed, you can scrape a web page using JavaScript:

1const puppeteer = require('puppeteer');

2

3async function scrapeWebsite(url) {

4 // Launch the browser

5 const browser = await puppeteer.launch();

6 const page = await browser.newPage();

7

8 // Navigate to the URL

9 await page.goto(url);

10

11 // Scrape data - as an example, let's scrape the title of the page

12 const pageTitle = await page.evaluate(() => {

13 return document.title;

14 });

15

16 console.log(`Title of the page is: ${pageTitle}`);

17

18 // Close the browser

19 await browser.close();

20}

21

22// Replace 'https://example.com' with the URL you want to scrape

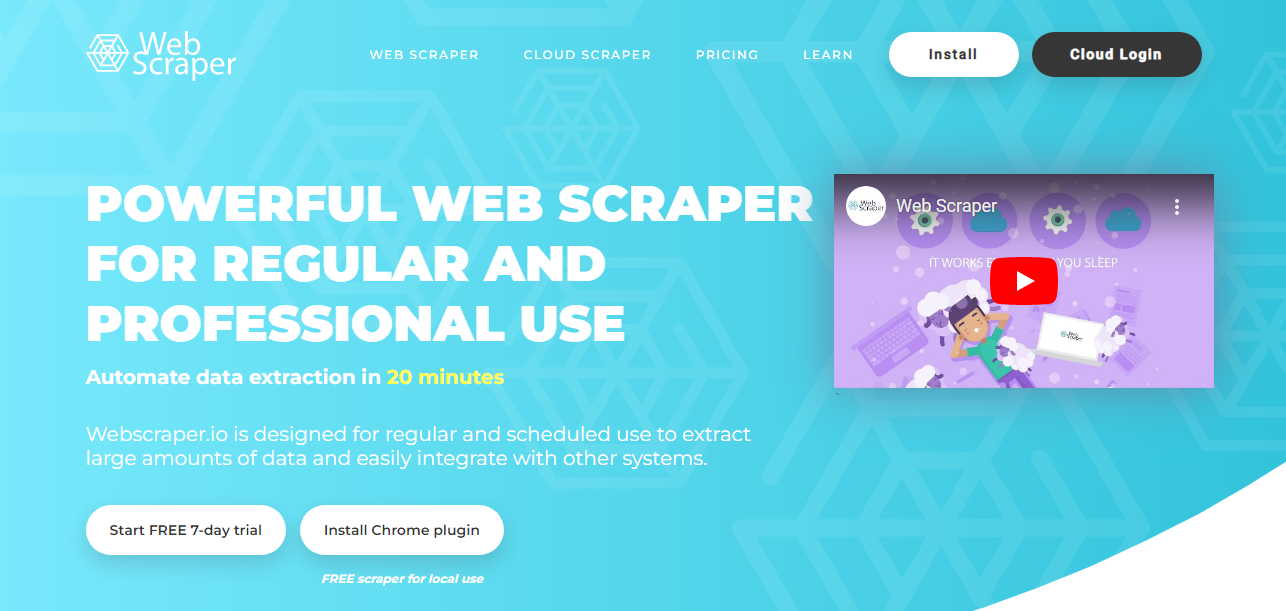

23scrapeWebsite('https://example.com');4. Web Scraping Tool - Webscraper

You can scrape the web by using Webscraper. Just follow these steps:

Step 1: Install the Webscraper extension from the Chrome Web Store and open it.

Step 2: Open the site that you want to scrape and create a sitemap. If the site spreads its content across numbered pages, you can specify multiple URLs with ranges:

- For consecutive page numbers, use a range URL like “http://example.com/page/[1-3]”.

- For zero-padded page numbers, use a range URL with zero padding like “http://example.com/page/[001-100]”.

- For page numbers that grow in fixed steps, use a range URL with an increment like “http://example.com/page/[0-100:10]”.
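To see which pages each range notation covers, the following sketch expands a range URL into the individual URLs it stands for. It is an illustration of the notation written for this article, not Webscraper’s own code; the function name and the rule that padding is kept only when the start value has leading zeros are assumptions.

```python
import re

# Matches [start-end] with an optional :step, e.g. [1-3], [001-100], [0-100:10]
RANGE_PATTERN = re.compile(r"\[(\d+)-(\d+)(?::(\d+))?\]")


def expand_range_url(url):
    """Expand the first [start-end(:step)] range in url into a list of URLs."""
    match = RANGE_PATTERN.search(url)
    if match is None:
        return [url]

    start_text, end_text, step_text = match.groups()
    step = int(step_text) if step_text else 1

    # Keep zero padding only when the start value is written with leading zeros
    has_padding = start_text.startswith("0") and len(start_text) > 1
    width = len(start_text) if has_padding else 0

    prefix, suffix = url[:match.start()], url[match.end():]
    return [
        prefix + str(number).zfill(width) + suffix
        for number in range(int(start_text), int(end_text) + 1, step)
    ]


print(expand_range_url("http://example.com/page/[1-3]"))
print(expand_range_url("http://example.com/page/[001-100]")[:2])
print(expand_range_url("http://example.com/page/[0-100:10]")[:3])
```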

Step 3: Once you have created a sitemap, the next step is to create selectors. These selectors are organized in a tree-like structure.

Step 4: The next step involves inspection of the selector tree. For this purpose, you need to inspect the Selector graph panel.

Step 5: With this, you are all set to scrape your desired web page. Just open the Scrape panel and start web scraping.

5. Web Scraping API – ScraperAPI

Web scraping is easy with ScraperAPI. This API is built for hassle-free integration and customization. To enable features such as JS rendering, IP geolocation, and residential or rotating proxies, append parameters like &render=true, &country_code=us, or &premium=true to your request.
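As a rough sketch of how those parameters end up in a request, the helper below assembles the query string with Python’s standard urlencode. The endpoint and parameter names come from this article; the function name, its keyword arguments, and the placeholder API key are assumptions for illustration. No request is sent.

```python
from urllib.parse import urlencode

API_ENDPOINT = "http://api.scraperapi.com/"
API_KEY = "INSERT_API_KEY_HERE"  # placeholder, replace with your own key


def build_request_url(target_url, render=False, country_code=None, premium=False):
    """Return the API URL for target_url with the optional flags appended."""
    params = {"api_key": API_KEY, "url": target_url}
    if render:
        params["render"] = "true"
    if country_code:
        params["country_code"] = country_code
    if premium:
        params["premium"] = "true"
    # urlencode percent-encodes the target URL so it survives as a single parameter
    return API_ENDPOINT + "?" + urlencode(params)


print(build_request_url("http://quotes.toscrape.com/page/1/", render=True, country_code="us"))
```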

Below are the steps to follow when you want to use ScraperAPI with the Python Requests library:

Step 1: Send requests to ScraperAPI using the API endpoint, Python SDK, or proxy port. Here is the code example:

    import requests
    from bs4 import BeautifulSoup

    API_KEY = 'INSERT_API_KEY_HERE'

    list_of_urls = ['http://quotes.toscrape.com/page/1/', 'http://quotes.toscrape.com/page/2/']

    scraped_quotes = []

    for url in list_of_urls:
        # ScraperAPI fetches the target URL on your behalf
        params = {'api_key': API_KEY, 'url': url}
        response = requests.get('http://api.scraperapi.com/', params=params)

        ## parse data if 200 status code (successful response)
        if response.status_code == 200:
            ## Example: parse data with beautifulsoup
            soup = BeautifulSoup(response.text, "html.parser")
            quotes_sections = soup.find_all('div', class_="quote")

            ## loop through each quotes section and extract the quote and author
            for quote_block in quotes_sections:
                quote = quote_block.find('span', class_='text').text
                author = quote_block.find('small', class_='author').text

                ## add scraped data to "scraped_quotes" list
                scraped_quotes.append({'quote': quote, 'author': author})

    print(scraped_quotes)

Step 2: Configure your code to automatically catch and retry failed requests returned by ScraperAPI. For this purpose, use the code example provided below.

    import requests
    from bs4 import BeautifulSoup

    API_KEY = 'INSERT_API_KEY_HERE'
    NUM_RETRIES = 3

    list_of_urls = ['http://quotes.toscrape.com/page/1/', 'http://quotes.toscrape.com/page/2/']

    scraped_quotes = []

    for url in list_of_urls:
        params = {'api_key': API_KEY, 'url': url}
        response = None

        ## send request to ScraperAPI, and automatically retry failed requests
        for _ in range(NUM_RETRIES):
            try:
                response = requests.get('http://api.scraperapi.com/', params=params)
                if response.status_code in [200, 404]:
                    ## stop retrying on a final answer: 200 (success) or 404 (not found)
                    break
            except requests.exceptions.ConnectionError:
                response = None

        ## parse data if 200 status code (successful response)
        if response is not None and response.status_code == 200:
            ## Insert the parsing code for your use case here...
            ## Example: parse data with beautifulsoup
            html_response = response.text
            soup = BeautifulSoup(html_response, "html.parser")
            quotes_sections = soup.find_all('div', class_="quote")

            ## loop through each quotes section and extract the quote and author
            for quote_block in quotes_sections:
                quote = quote_block.find('span', class_='text').text
                author = quote_block.find('small', class_='author').text

                ## add scraped data to "scraped_quotes" list
                scraped_quotes.append({
                    'quote': quote,
                    'author': author
                })

    print(scraped_quotes)

Step 3: Scale up your scraping by spreading your requests across multiple concurrent threads. You can use this web scraping code.

    import concurrent.futures

    import requests
    from bs4 import BeautifulSoup

    API_KEY = 'INSERT_API_KEY_HERE'
    NUM_RETRIES = 3
    NUM_THREADS = 5

    ## Example list of urls to scrape
    list_of_urls = [
        'http://quotes.toscrape.com/page/1/',
        'http://quotes.toscrape.com/page/2/',
    ]

    ## we will store the scraped data in this list
    scraped_quotes = []

    def scrape_url(url):
        params = {'api_key': API_KEY, 'url': url}
        response = None

        ## send request to ScraperAPI, and automatically retry failed requests
        for _ in range(NUM_RETRIES):
            try:
                response = requests.get('http://api.scraperapi.com/', params=params)
                if response.status_code in [200, 404]:
                    ## stop retrying on a final answer: 200 (success) or 404 (not found)
                    break
            except requests.exceptions.ConnectionError:
                response = None

        ## parse data if 200 status code (successful response)
        if response is not None and response.status_code == 200:
            ## Example: parse data with beautifulsoup
            html_response = response.text
            soup = BeautifulSoup(html_response, "html.parser")
            quotes_sections = soup.find_all('div', class_="quote")

            ## loop through each quotes section and extract the quote and author
            for quote_block in quotes_sections:
                quote = quote_block.find('span', class_='text').text
                author = quote_block.find('small', class_='author').text

                ## add scraped data to "scraped_quotes" list
                scraped_quotes.append({
                    'quote': quote,
                    'author': author
                })

    ## run scrape_url on each URL using a pool of worker threads
    with concurrent.futures.ThreadPoolExecutor(max_workers=NUM_THREADS) as executor:
        executor.map(scrape_url, list_of_urls)

    print(scraped_quotes)

Limitations of Web Scraping

Before you go ahead and start web scraping, it would be appropriate to learn about the limitations you might face. Here are a few of the most prominent limitations of web scraping:

- Due to the dynamic nature of websites, it is hard for web scrapers to extract required data by applying predefined logic and patterns.

- The use of heavy JavaScript or AJAX by a website also makes web scraping more challenging.

- Anti-scraping software can also block requests coming from specific IP addresses, which stops scrapers that rely on them.

How to Protect Your Website Against Web Scraping?

If you don’t want others to scrape your website’s data, we have got you covered. Below is a list of ways to protect your website against web scraping.

These include:

- Control the visits of scrapers by setting limits on connections and requests.

- Hide the valuable data by publishing it in the form of an image or Flash content. This will prevent scraping tools from accessing your structured data.

- Use JavaScript or cookies to verify that visitors aren’t scraping tools or web scraping applications.

- You can also add CAPTCHAs to ensure that only humans visit your site.

- Identify and block scraping tools and traffic from malicious sources.

- Update your HTML markup, such as tags and class names, frequently so that scrapers relying on fixed selectors stop working.
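The first measure in the list, limiting requests, can be sketched as a sliding-window counter per client IP. This is a simplified in-memory illustration; the class name, the limits, and the IP addresses are assumptions, and a production site would more likely rely on its web server, CDN, or firewall for this.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within the last window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client IP -> timestamps of accepted requests

    def allow(self, client_ip, now):
        hits = self._hits[client_ip]
        # Drop timestamps that have fallen out of the window
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=10)

# Simulated request times in seconds for one hypothetical client
for timestamp in [0.0, 1.0, 2.0, 3.0, 10.0]:
    print(timestamp, limiter.allow("203.0.113.7", timestamp))
```

Passing the timestamp in explicitly keeps the sketch easy to test; a real server would use the current time of each incoming request.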

Final Thoughts

The availability of free web scraping methods and tools can open up new opportunities for businesses with limited budgets. As a result, you can access valuable data associated with your target audience. Each of the methods provided above has its strengths and weaknesses. However, it’s up to you to choose a perfect data collection process depending on your web scraping needs.
